Monitor your AI stack with LLM observability

Detect risks, resolve issues, and keep your agentic and generative AI applications production-ready with Elastic Observability’s end-to-end monitoring capabilities.

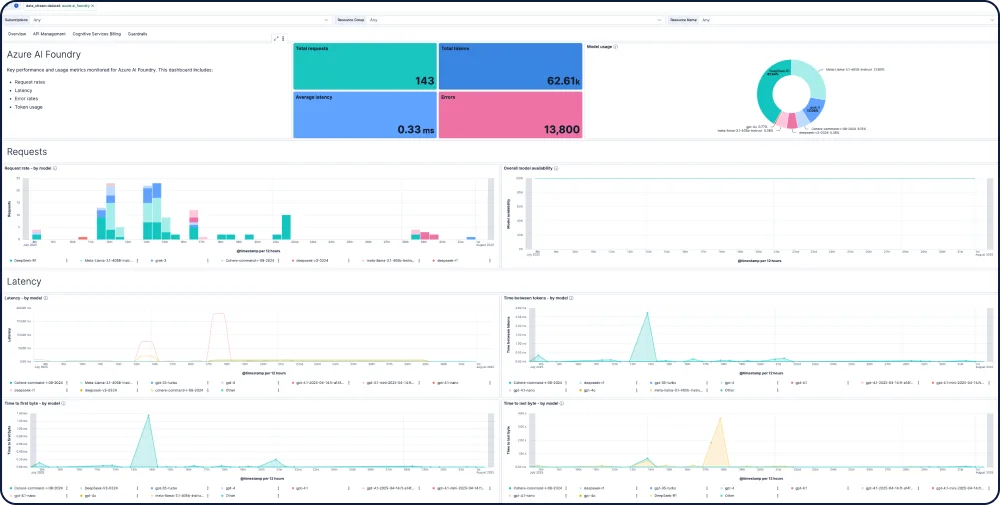

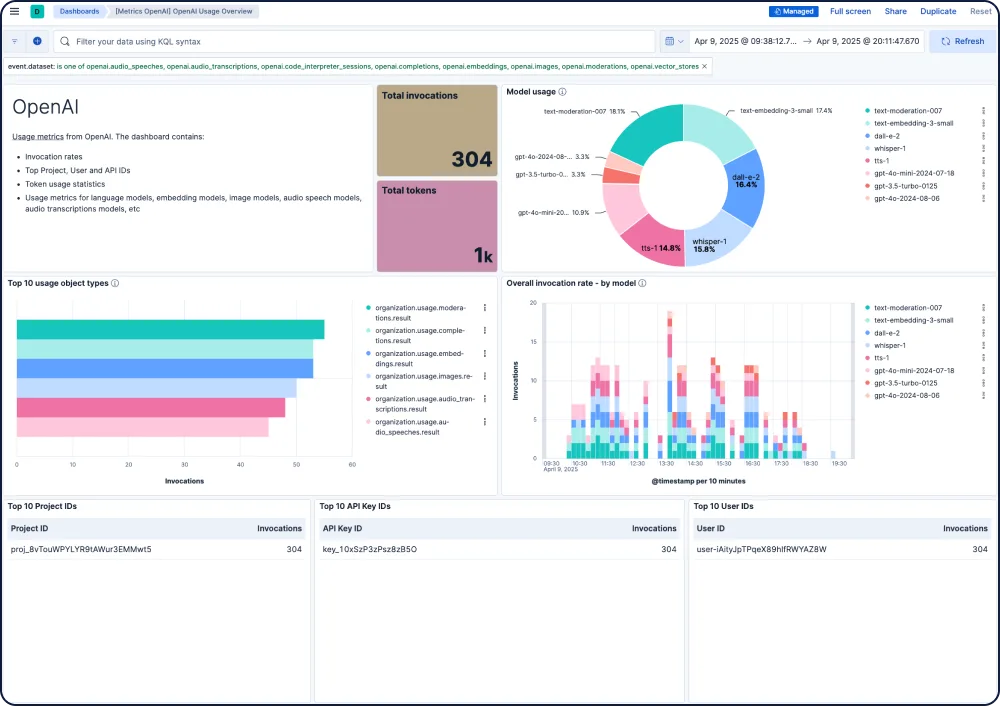

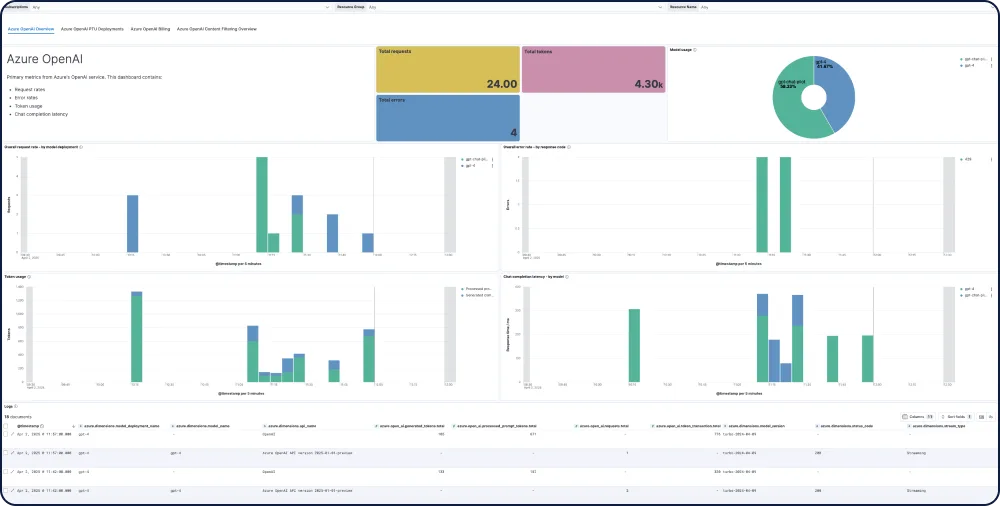

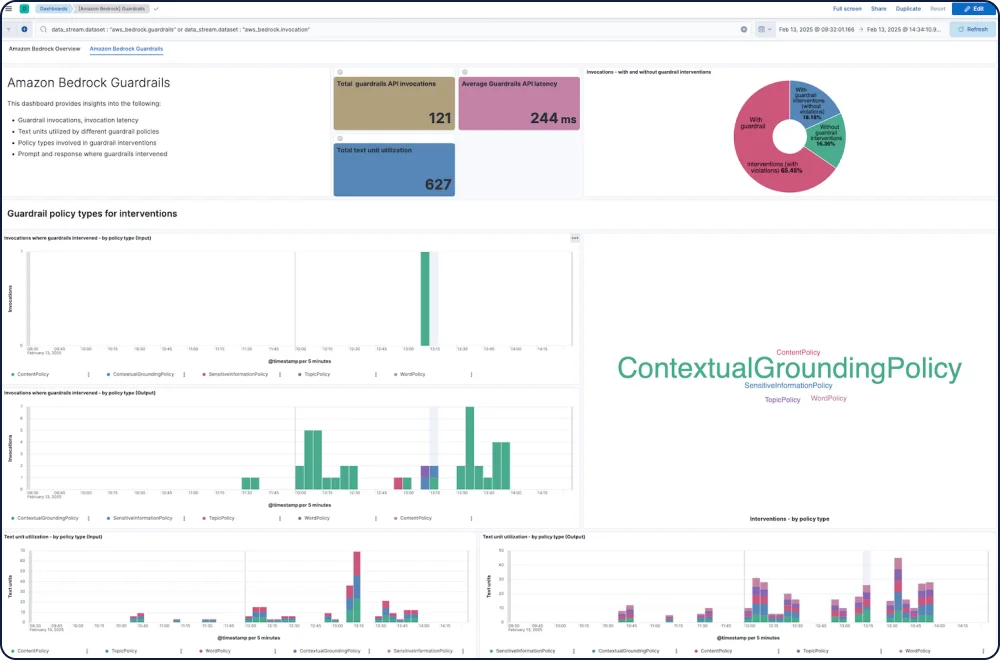

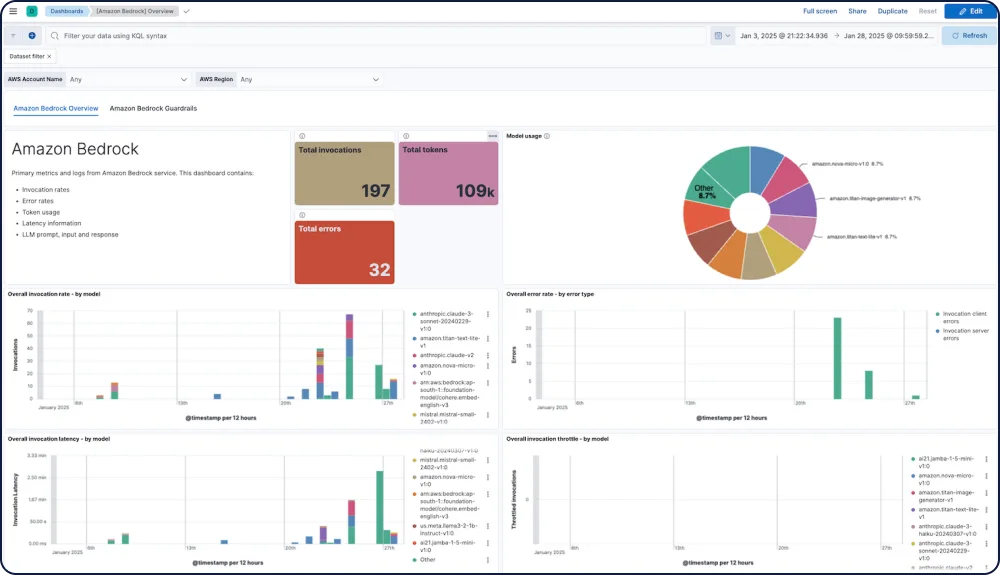

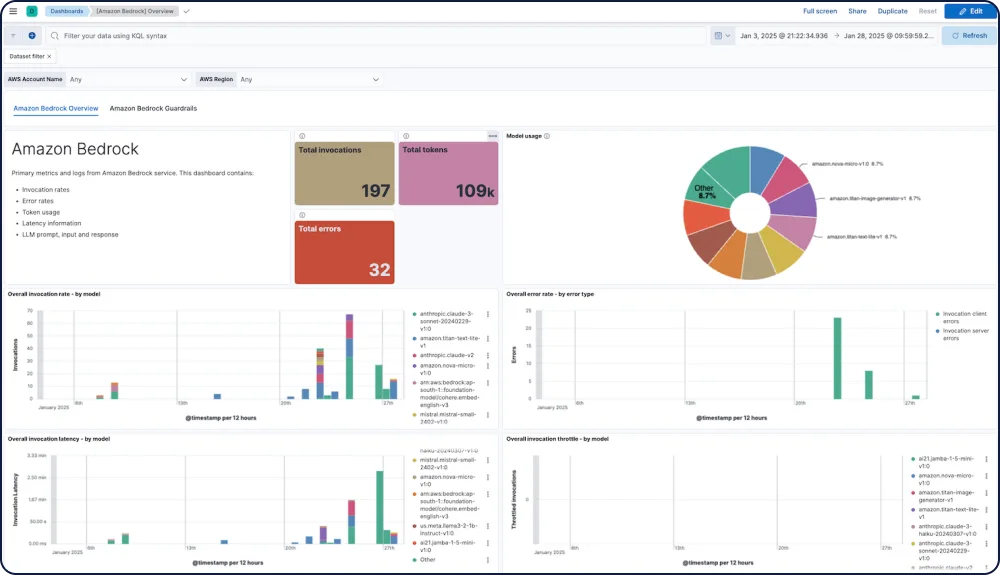

DASHBOARD GALLERY

Integrations and capabilities for your AI apps

Track performance, safety, and spend across the models you use.

The Amazon Bedrock integration for Elastic Observability provides comprehensive visibility into the performance, usage, and safety of LLMs served through Amazon Bedrock, including models from Anthropic, Mistral, and Cohere.

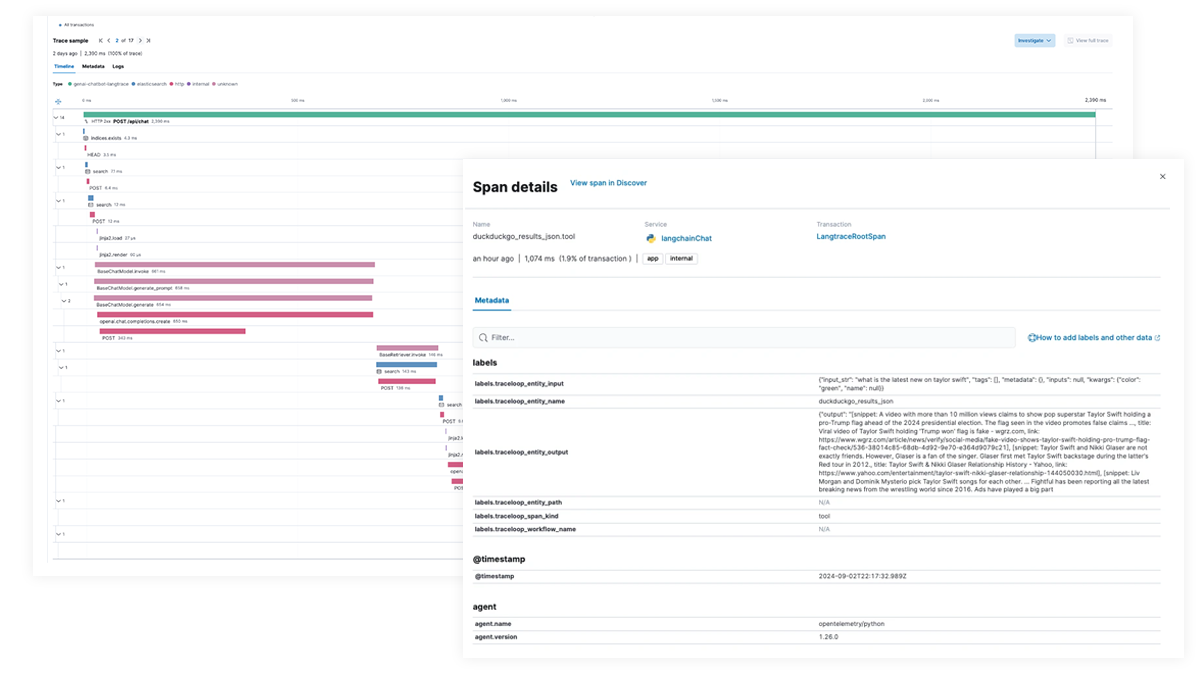

End-to-end tracing and debugging for AI apps and agentic workflows

Use Elastic APM with OpenTelemetry to analyze and debug AI apps. Instrumentation is supported through the Elastic Distributions of OpenTelemetry (EDOT) for Python, Java, and Node.js, and through third-party tracing libraries such as LangTrace, OpenLIT, and OpenLLMetry.

Try the Elastic chatbot RAG app yourself!

This sample app combines Elasticsearch, LangChain, and a choice of LLMs to power a chatbot that uses ELSER to retrieve relevant passages from your private data.
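The retrieval-augmented generation (RAG) pattern behind a chatbot like this can be sketched in two steps: retrieve passages with an ELSER query, then place them in the prompt sent to the LLM. The Python below is an illustrative sketch, not the sample app's code (the app itself uses LangChain). The field name `content_embedding`, the `text` source field, and the inference endpoint ID `.elser-2-elasticsearch` are assumptions. Substitute whatever your index mapping and ELSER deployment actually define. The `sparse_vector` query requires a recent Elasticsearch 8.x release.

```python
# Sketch of the two RAG steps: build an ELSER retrieval query, then build a prompt.
# Assumed (hypothetical) names: adjust to your own index mapping and deployment.
ELSER_FIELD = "content_embedding"           # sparse_vector field holding ELSER tokens
ELSER_INFERENCE_ID = ".elser-2-elasticsearch"  # inference endpoint serving ELSER
TEXT_FIELD = "text"                         # source field with the raw passage text


def build_retrieval_query(question: str, size: int = 3) -> dict:
    """Return a _search request body that ranks passages with an ELSER sparse_vector query."""
    return {
        "size": size,
        "query": {
            "sparse_vector": {
                "field": ELSER_FIELD,
                "inference_id": ELSER_INFERENCE_ID,
                "query": question,
            }
        },
        "_source": [TEXT_FIELD],
    }


def build_prompt(question: str, search_response: dict) -> str:
    """Insert the retrieved passages into a prompt for the LLM of your choice."""
    passages = [hit["_source"][TEXT_FIELD] for hit in search_response["hits"]["hits"]]
    context = "\n\n".join(passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

In use, you would send the body from `build_retrieval_query` to the Elasticsearch `_search` endpoint, pass the JSON response to `build_prompt`, and then send the resulting prompt to your LLM. Keeping retrieval and prompt assembly as separate functions makes it easy to swap the model without touching the search step.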