Elastic named a Leader in the 2024 Gartner Magic Quadrant for Observability Platforms

Elastic has been named a Leader in the 2024 Gartner® Magic Quadrant™ for Observability Platforms. The need for observability platforms continues to evolve as operations teams deal with increased complexity and exponential data growth. Emerging trends like generative AI are driving a paradigm shift in proactive root cause detection and resolution.

“We believe Elastic’s recognition as a Leader in the 2024 Gartner Magic Quadrant for Observability Platforms attests to the innovation that Elastic has delivered. With the evolution of AI, exponential growth in data complexity, and customers’ increased focus on business performance and continuity, Elastic is in a strong position to meet customers’ future observability needs while helping them control costs.”

Abhishek Singh, General Manager, Elastic Observability

Elastic Observability, powered by Search AI, helps prevent outages through proactive insights and a no-compromise Search AI platform that enables customers to retain and use all their data. It improves operational efficiency with reduced costs while future-proofing an organization’s investment.

Let’s take a look at a few key considerations for an observability platform.

Observability is a data problem

As applications and infrastructure grow more complex, observability platforms must provide contextual insights from large volumes of complex data. It is increasingly critical to have an observability platform that can ingest and retain logs, metrics, traces, profiling, and business data at high cardinality and dimensionality for longer periods of time. Retaining your data in full fidelity allows SRE teams to proactively analyze trends and patterns with analytics or machine learning (ML) capabilities to avoid service disruption and downtime.

As an organization's need for increased visibility grows, it is important to consider the broadest range of telemetry types to ensure full coverage. Given the exponential increase in the volume of data generated, solutions must be architected to surface relevant insights at scale.

Elastic’s full-stack observability solution enables end-to-end visibility to help drive tool consolidation and avoid swivel-chairing between tools, resulting in faster root cause analysis. Additionally, the Elastic Common Schema and a schema-on-write approach provide correlation and context across petabytes of indexed data with fast analytics.
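To make the schema-on-write idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Elastic's implementation: the two sources (`web` and `app`) and their source field names are hypothetical, while the target names (`@timestamp`, `host.name`, `service.name`, `log.level`, `message`, `trace.id`, and the `labels.` prefix for custom keys) follow Elastic Common Schema naming. Because fields are renamed once at ingest, a single lookup on `trace.id` can correlate events from both sources.

```python
from collections import defaultdict

# Hypothetical per-source mappings (source field -> ECS field name).
# The source field names are invented for this sketch.
FIELD_MAPS = {
    "web": {
        "ts": "@timestamp",
        "server": "host.name",
        "svc": "service.name",
        "severity": "log.level",
        "msg": "message",
        "traceparent_id": "trace.id",
    },
    "app": {
        "time": "@timestamp",
        "hostname": "host.name",
        "application": "service.name",
        "level": "log.level",
        "text": "message",
        "trace": "trace.id",
    },
}


def normalize(source, record):
    """Rename known source fields to ECS names at ingest time (schema on write)."""
    mapping = FIELD_MAPS[source]
    doc = {}
    for key, value in record.items():
        # Unmapped fields are kept under an ECS-style 'labels.' prefix, not dropped.
        doc[mapping.get(key, f"labels.{key}")] = value
    return doc


def correlate_by_trace(docs):
    """Group already-normalized documents by their trace.id value."""
    groups = defaultdict(list)
    for doc in docs:
        groups[doc.get("trace.id")].append(doc)
    return dict(groups)
```

For example, normalizing one `web` record and one `app` record that share a trace identifier and passing both to `correlate_by_trace` yields a single group containing both documents, with no per-query field translation.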

AI is changing how observability is done

AI encompasses ML and the evolving generative AI. ML capabilities within observability drive anomaly detection for faster root cause analysis. However, ML-driven anomaly detection has limitations and often results in false alerts and manual troubleshooting due to the complexity and variety of data collected. These limitations include data retention, uniqueness of telemetry data types, different data formats, and limitations of built-in ML models.
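As a rough illustration of what anomaly detection on a single metric can look like, here is a minimal Python sketch of a trailing-window z-score check. This is a deliberately simple statistical stand-in chosen for this example, not the models Elastic Observability uses; the function name, `window`, and `threshold` are assumptions.

```python
from statistics import mean, stdev


def zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: any change at all is treated as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Invented latency samples in milliseconds, with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 450, 101, 100, 99]
spikes = zscore_anomalies(latencies_ms)  # flags index 6, the 450 ms sample
```

Even this toy version hints at the limitations listed above: once the spike enters the trailing window it inflates the standard deviation, so nearby deviations are harder to flag, and a short window with little retained history gives the check very little to learn from.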

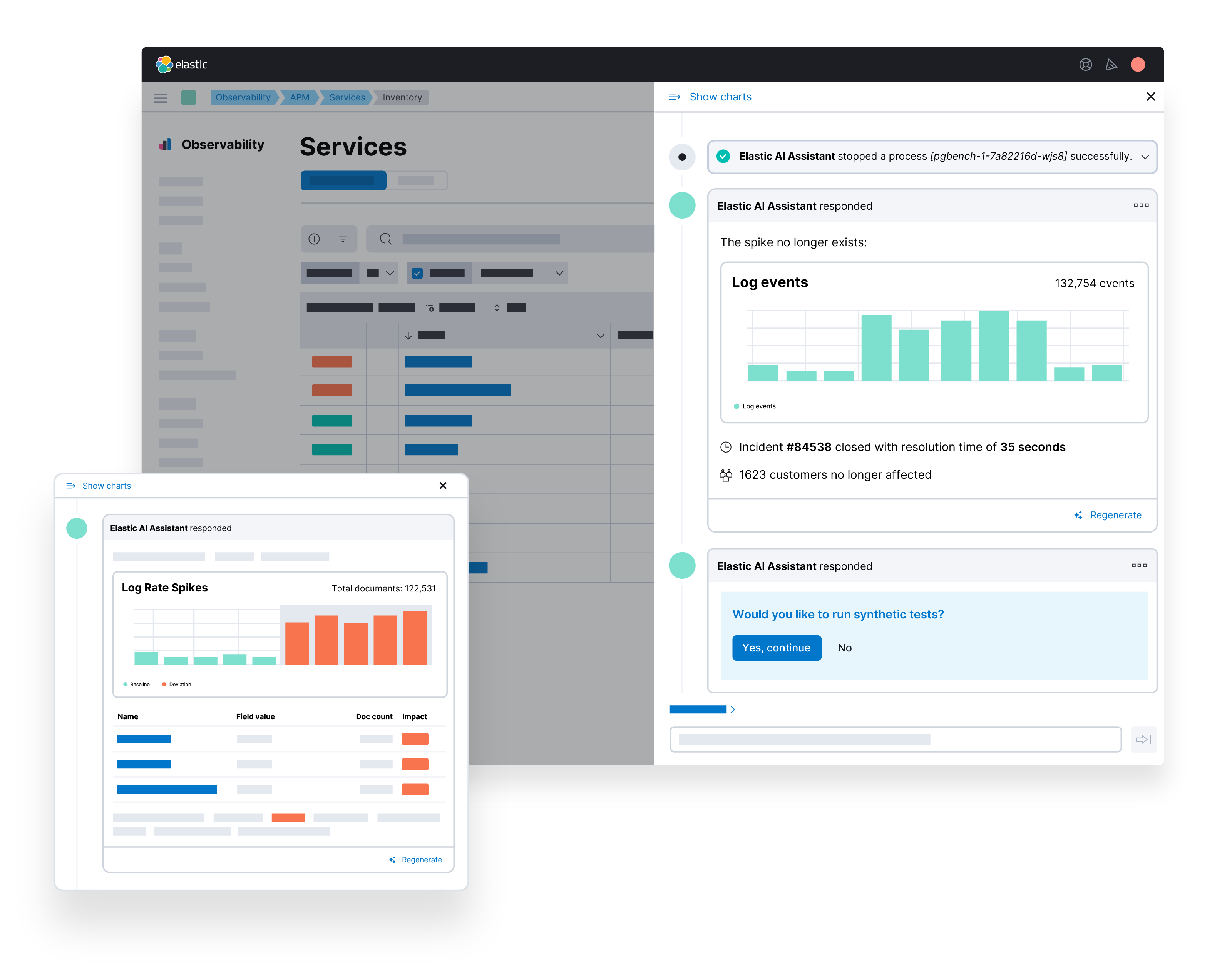

Generative AI is transforming observability from a manual, tedious process of data exploration and correlation for root cause identification, one that often requires intervention from multiple experts, into a more intuitive and intelligent workflow-driven process for problem resolution. This advancement allows operations teams to understand system behavior and impact without needing in-depth expertise, surfacing relevant insights that enable auto-detection, diagnosis, and remediation of issues.

While generative AI offers promise, it is also susceptible to hallucinations, and responses from large language models (LLMs) that lack the context of private data can be less meaningful or just plain misleading.

With Elastic Observability, we provide the fastest resolution, limitless retention, and unparalleled openness, delivering operational efficiency with reduced costs and unified insights, all powered by our proven Search AI. Leverage our context-aware troubleshooting to interactively and automatically surface root causes across petabytes of indexed and correlated data. Increase SRE productivity by effortlessly surfacing relevant and accurate insights with generative AI augmented by your private information.

In addition, gain proactive detection and remediation with both out-of-the-box and bring-your-own ML models applied to enriched and standardized telemetry across all data types via Elastic Common Schema for faster root cause analysis.

Full-stack observability shouldn’t be cost-prohibitive

An effective observability platform depends on its ability to ingest and retain all observability data for longer periods without being cost-prohibitive. Often, operations and development teams have to compromise on multiple dimensions due to increasing costs from:

- Complicated pricing schemes and SKUs where observability capabilities are priced separately, resulting in unforeseen costs
- The need to increase data retention periods for each telemetry type to meet business needs, often incurring additional costs
- An exponential increase in storage costs when adding and using necessary, custom metadata
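The last point is easier to see with some arithmetic. The Python sketch below assumes a store that keeps one time series per unique combination of label values; that storage model, the label names, and all the numbers are assumptions made up for illustration. Under that assumption, the upper bound on series count is the product of each label's distinct values, so every added custom label multiplies it.

```python
from math import prod


def series_count(label_cardinalities):
    """Upper bound on distinct time series: the product of the number of
    distinct values each label can take."""
    return prod(label_cardinalities.values())


# Invented label cardinalities for a baseline metric.
base = {"service": 50, "host": 200, "endpoint": 30}

# The same metric after adding two custom metadata labels.
with_custom = {**base, "customer_tier": 4, "region": 6}

base_series = series_count(base)           # 50 * 200 * 30 = 300,000
custom_series = series_count(with_custom)  # 300,000 * 4 * 6 = 7,200,000
growth = custom_series // base_series      # 24x more potential series
```

In practice not every combination occurs, so the real number is usually lower than this bound, but the multiplicative effect is why custom metadata can become a significant cost driver in such systems.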

One common solution is to only monitor Tier 1 applications in your environment, but this compromise can often lead to operational blind spots. The potential impact? Customers identify performance issues before operations teams do, teams resort to finger-pointing, and problem identification and resolution slow down.

With Elastic Observability, your organization can attain unmatched scale and analytics at reduced costs with a unified data store, high-performance data tiers, and distributed search delivering a single-pane-of-glass experience. Eliminate monitoring blind spots cost-effectively by ingesting and storing high-cardinality data with limitless retention.

An open observability platform built for the future

As organizations mature in their adoption of observability, there is an increasing need for observability platforms to be open and extensible. A key element of this strategy is the continuing maturity and adoption of OpenTelemetry (OTel), which offers operations teams the ability to make long-term decisions without being locked in to a specific vendor. Within OpenTelemetry, metrics, logs, and traces are ready for production adoption, and continuous profiling was recently introduced as a potential fourth signal. OpenTelemetry offers an industry-leading path for standardizing the ingest and retention of all your telemetry data.

With Elastic Observability, you can avoid vendor lock-in and future-proof investments with an open, extensible solution that seamlessly integrates with your ecosystem and delivers the most fully functional OpenTelemetry capabilities. Whether you choose our flexible cloud or on-prem deployment model, Elastic Observability brings the power of Search AI to all of your telemetry data while also ensuring data privacy.

To find out more about why Elastic was named a Leader in the Gartner Magic Quadrant for Observability Platforms, download the report.

Gartner, Magic Quadrant for Observability Platforms, Gregg Siegfried, Padraig Byrne, Mrudula Bangera, Matt Crossley, 12 August 2024.

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally, and MAGIC QUADRANT is a registered trademark of Gartner, Inc. and/or its affiliates and are used herein with permission. All rights reserved.

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation. Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.

_________________________________________________________________________________________________

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.