Context-aware insights using the Elastic AI Assistant for Observability

Elastic® is extending the Elastic AI Assistant, the open, generative AI assistant powered by the Elasticsearch Relevance Engine™ (ESRE), to enhance observability analysis and support users of every skill level. Elastic’s AI Assistant (in technical preview for Observability) transforms problem identification and resolution. It eliminates manual data chasing across silos with an interactive assistant that delivers context-aware information for SREs.

The Elastic AI Assistant helps users understand application errors, interpret log messages, analyze alerts, and find suggestions for improving code efficiency. Additionally, Elastic AI Assistant’s interactive chat interface allows SREs to chat and visualize all relevant telemetry cohesively in one place, while also leveraging proprietary data and runbooks for additional context.

While the AI Assistant is configured to connect to your LLM of choice, such as OpenAI or Azure OpenAI, Elastic also allows users to provide private information to the AI Assistant. Private information can include items such as runbooks, a history of past incidents, case history, and more. Using an inference processor powered by the Elastic Learned Sparse EncodeR (ELSER), the Assistant gets access to the most relevant data for answering specific questions or performing tasks.
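To make the retrieval step more concrete, here is a minimal sketch of how a question could be turned into an ELSER-ranked search request using Elasticsearch's `text_expansion` query. This is an illustration, not necessarily what the AI Assistant runs internally: the field name `ml.tokens`, the model ID `.elser_model_1`, and the helper name `build_elser_query` are assumptions chosen for the example.

```python
import json
from typing import Any, Dict


def build_elser_query(question: str,
                      tokens_field: str = "ml.tokens",      # assumed field holding ELSER tokens
                      model_id: str = ".elser_model_1",     # assumed ELSER model ID
                      size: int = 3) -> Dict[str, Any]:
    """Build a search request body that ranks documents with a text_expansion query."""
    if not question.strip():
        raise ValueError("question must not be empty")
    return {
        "size": size,  # number of top-ranked documents to return
        "query": {
            "text_expansion": {
                tokens_field: {
                    "model_id": model_id,
                    "model_text": question,
                }
            }
        },
    }


# Example: look up private runbook content relevant to a question.
request_body = build_elser_query("What is the runbook for a pgbench log spike?")
print(json.dumps(request_body, indent=2))
```

The resulting body could be sent to the `_search` endpoint of an index whose documents were enriched by an ELSER inference processor at ingest time.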

The AI Assistant can learn and grow its knowledge base with continued use. SREs can teach the assistant about a specific problem so that the assistant can provide support for the scenario in the future and can assist in composing outage reports, updating runbooks, and enhancing automated root cause analysis. SREs can pinpoint and resolve issues faster and more proactively through the combined power of the Elastic AI Assistant and machine learning capabilities by eliminating manual data retrieval across silos, effectively turbocharging AIOps.

In this blog, we will provide a review of some of the initial features available in technical preview in 8.10:

- Chat capability available on any screen in Elastic.

- Specific prebuilt LLM queries to obtain more contextual information for:

  - Understanding the meaning of a log message

  - Getting better options for handling errors from services monitored by Elastic APM

  - Identifying more context for log alerts and how to potentially manage them

  - Understanding how to optimize code in Universal Profiling

  - Understanding which processes are running on hosts monitored in Elastic

Additionally, you can check out the following video, which is a review of our features in this particular scenario.

Elastic AI Assistant for Observability

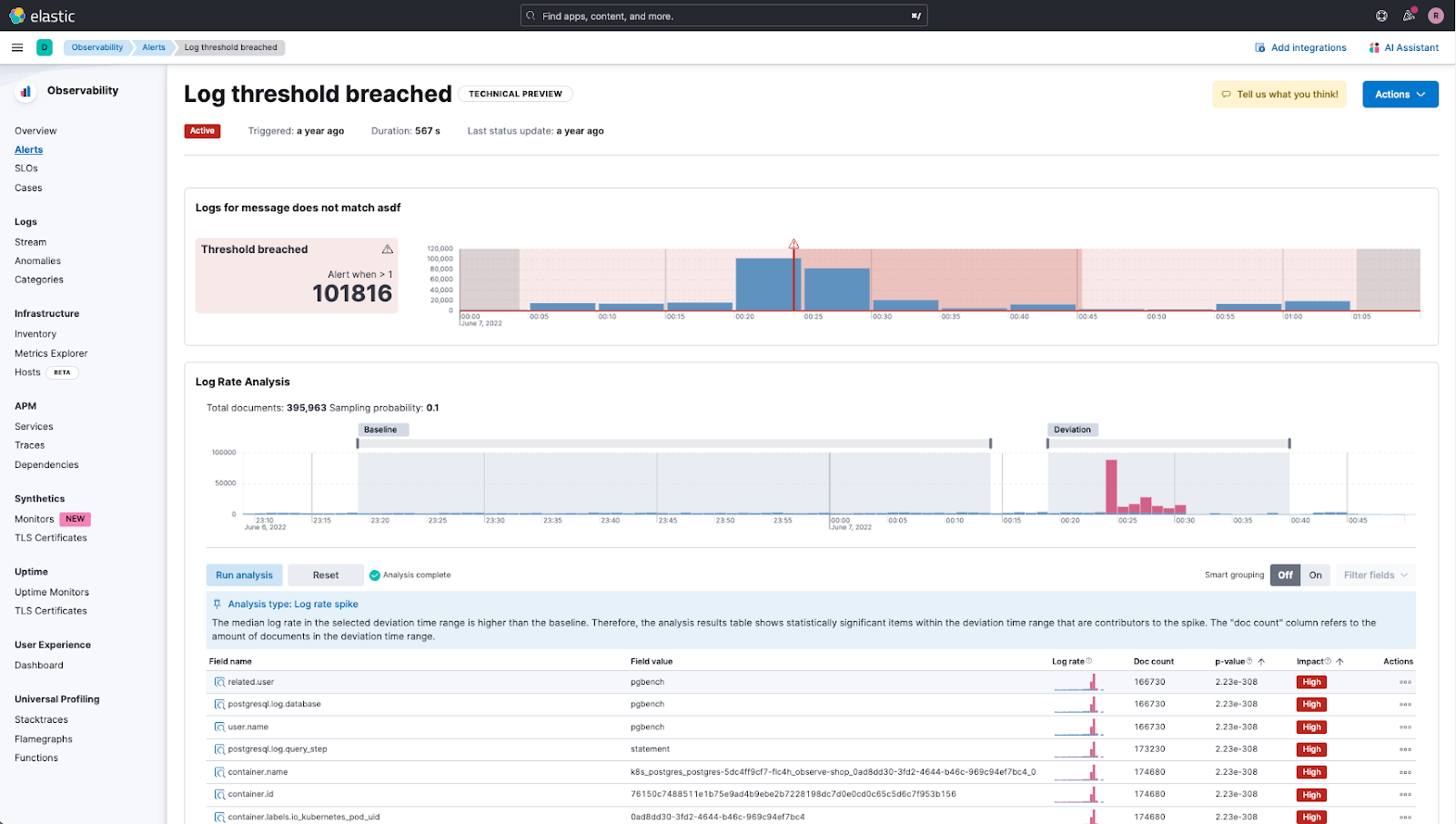

As an SRE, you receive an alert indicating that the number of log entries has crossed a threshold. Elastic Observability not only raises the alert but also adds relevant log spike analysis in real time to help you understand the issue.

Using AI Assistant to analyze an issue

However, as an SRE you may not have in-depth knowledge of every system you are responsible for, and you may need more help interpreting the log spike analysis. This is where the AI Assistant can help.

Elastic pre-builds a prompt which is sent to your configured LLM, and you get not only the description and context of the issue, but also some recommendations on how to proceed.
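As a rough illustration of what a pre-built prompt can look like, the sketch below assembles a request body in the OpenAI chat completions format (a `model` plus a list of `messages`). The prompt wording, the helper name `build_alert_prompt`, and the sample values are all hypothetical; the actual prompt Elastic sends is not shown in this post.

```python
import json
from typing import Any, Dict, List


def build_alert_prompt(alert_reason: str,
                       log_samples: List[str],
                       model: str = "gpt-4") -> Dict[str, Any]:
    """Assemble a chat completions payload that asks an LLM to explain an alert."""
    samples = "\n".join(f"- {line}" for line in log_samples)
    user_message = (
        "An alert fired in our observability platform.\n"
        f"Reason: {alert_reason}\n"
        f"Sample log lines:\n{samples}\n"
        "Explain the likely cause and recommend next steps."
    )
    return {
        "model": model,
        "messages": [
            # The system message sets the assistant's role (hypothetical wording).
            {"role": "system",
             "content": "You are an assistant helping an SRE investigate alerts."},
            # The user message carries the alert context.
            {"role": "user", "content": user_message},
        ],
    }


payload = build_alert_prompt(
    "Log entry count crossed the configured threshold",
    ["pgbench: transaction rate changed"],  # made-up sample log line
)
print(json.dumps(payload, indent=2))
```

The value of a pre-built prompt is that the context (alert reason, sample logs) is gathered for you, so you do not have to copy it into a chat window by hand.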

Additionally, you can launch a chat with the AI Assistant and go deeper into your investigation.

As you saw in the above clip, not only can you get further information about the “pgbench” issue, but you can also investigate how it impacts your business by obtaining internal organizational information. In this case, we learned:

- How the significant change in transaction throughput rate could affect our revenue

- How revenue dropped when this log spike occurred

Both pieces of information were obtained using ELSER, which retrieves organizational (private) data that LLMs will never be trained on and uses it to help answer specific questions.

AI Assistant chat interface

So what can you achieve with the new AI Assistant chat interface? The chat capability allows you to have a session/conversation with the Elastic AI Assistant, enabling you to:

- Use a natural language interface such as “Are there any alerts related to this service today?” or “Can you explain what these alerts are?” as part of problem determination and root cause analysis processes

- Get conclusions and context, along with suggested next steps and recommendations, drawn from your own internal private data (powered by ELSER) as well as from information available in the connected LLM

- Analyze responses from queries and output from analysis performed by the Elastic AI Assistant

- Recall and summarize information throughout the conversation

- Generate Lens visualizations via conversation

- Execute Kibana® and Elasticsearch® APIs on behalf of the user through the chat interface

- Perform root cause analysis using specific APM functions such as get_apm_timeseries, get_apm_service_summary, get_apm_error_document, get_apm_correlations, get_apm_downstream_dependencies, etc.
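Functions like these are made available to the LLM through function calling. The sketch below shows, under stated assumptions, how an application might route a model's function call to a local handler. It assumes the OpenAI-style shape of a function call (a `name` plus JSON-encoded `arguments`); the handler body, its `service_name` parameter, and the returned data are hypothetical stand-ins, not Elastic's implementation.

```python
import json
from typing import Any, Callable, Dict


def get_apm_service_summary(service_name: str) -> Dict[str, Any]:
    """Hypothetical stand-in handler: a real one would query APM data."""
    return {"service": service_name, "status": "placeholder"}


# Registry mapping the function names advertised to the LLM to local handlers.
REGISTRY: Dict[str, Callable[..., Dict[str, Any]]] = {
    "get_apm_service_summary": get_apm_service_summary,
}


def dispatch(function_call: Dict[str, str]) -> Dict[str, Any]:
    """Look up the requested function and invoke it with the decoded arguments."""
    name = function_call["name"]
    if name not in REGISTRY:
        raise KeyError(f"unknown function: {name}")
    # Arguments arrive as a JSON string; treat a missing or empty value as "{}".
    arguments = json.loads(function_call.get("arguments") or "{}")
    return REGISTRY[name](**arguments)


# Example: the model asks for a summary of a (made-up) "checkout" service.
result = dispatch({
    "name": "get_apm_service_summary",
    "arguments": '{"service_name": "checkout"}',
})
print(result)
```

The handler's result would typically be sent back to the model as a follow-up message so it can continue the analysis with real data.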

Other locations to access AI Assistant

The chat capability in Elastic AI Assistant for Observability will initially be accessible from several locations in Elastic Observability 8.10, and additional access points will be added in future releases.

- All Observability applications have a button in the top action menu to open the AI Assistant and start a conversation.

- Users can access existing conversations and create new ones by clicking the Go to conversations link in the AI Assistant.

The new chat capability in Elastic AI Assistant for Observability enhances the capabilities introduced in Elastic Observability 8.9 by providing the ability to start a conversation related to the insights already being provided by the Elastic AI Assistant in APM, logging, hosts, alerting, and profiling.

For example:

- In APM, it explains the meaning of a specific error or exception and offers common causes and possible impacts. Additionally, you can investigate it further with a conversation.

- In logging, the AI Assistant takes the log and helps you understand the message with contextual information. You can also investigate further with a conversation.

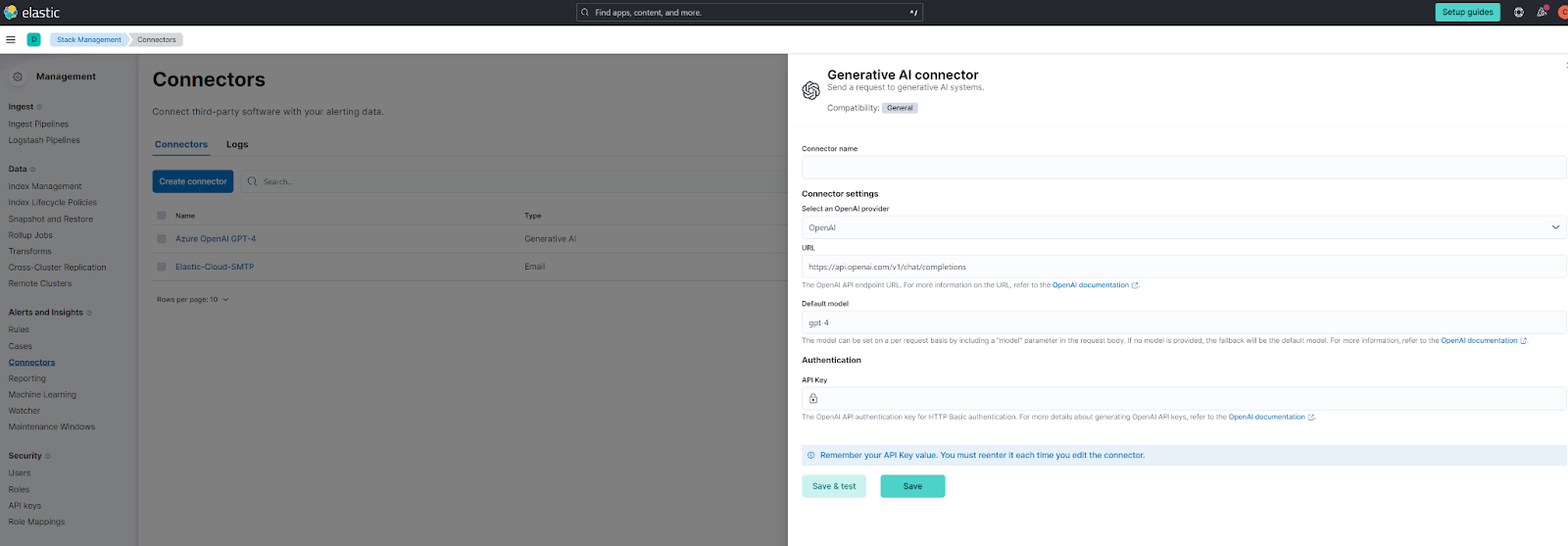

Configuring the AI Assistant

Details on how to configure the Elastic AI Assistant can be found in the Elastic documentation.

The AI Assistant is connector-based and initially supports configuration for OpenAI and Azure OpenAI. Note that the use of GPT-4 is recommended, and support for function calling is a requirement. If you’re using OpenAI directly, you will have these capabilities automatically. If you are using the Azure version, make sure to deploy one of the more recent models according to the Azure documentation.
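For Azure OpenAI, the connector's API URL combines a resource name, a deployment name, and an API version, following the pattern that appears in the pre-configured connector example in this section. Here is a minimal sketch of assembling that URL; the helper name `azure_openai_chat_url` and the example values (including the API version string) are assumptions for illustration only.

```python
from urllib.parse import quote


def azure_openai_chat_url(resource_name: str,
                          deployment_name: str,
                          api_version: str) -> str:
    """Build the chat completions URL for an Azure OpenAI deployment."""
    for label, value in (("resource_name", resource_name),
                         ("deployment_name", deployment_name),
                         ("api_version", api_version)):
        if not value:
            raise ValueError(f"{label} must not be empty")
    # Percent-encode each part so unexpected characters cannot break the URL.
    return (
        f"https://{quote(resource_name, safe='')}.openai.azure.com"
        f"/openai/deployments/{quote(deployment_name, safe='')}"
        f"/chat/completions?api-version={quote(api_version, safe='')}"
    )


# Example values are placeholders; substitute your own resource and deployment.
url = azure_openai_chat_url("my-resource", "my-gpt4", "2023-07-01-preview")
print(url)
```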

Additionally, it can be set up in YAML as a pre-configured connector:

    xpack.actions.preconfigured:
      open-ai:
        actionTypeId: .gen-ai
        name: OpenAI
        config:
          apiUrl: https://api.openai.com/v1/chat/completions
          apiProvider: OpenAI
        secrets:
          apiKey: <myApiKey>
      azure-open-ai:
        actionTypeId: .gen-ai
        name: Azure OpenAI
        config:
          apiUrl: https://<resourceName>.openai.azure.com/openai/deployments/<deploymentName>/chat/completions?api-version=<apiVersion>
          apiProvider: Azure OpenAI
        secrets:
          apiKey: <myApiKey>

The Elastic AI Assistant for Observability is in technical preview and is licensed as an Enterprise feature, so it is only available to users with an Enterprise license. If you are not an Elastic Enterprise user, please upgrade for access to the Elastic AI Assistant for Observability.

Your feedback is important to us! Please use the feedback form available in the Elastic AI Assistant, Discuss forums (tagged with AI Assistant or AI operations), and/or Elastic Community Slack (#observability-ai-assistant).

Lastly, we want to point out that this feature is still in technical preview. Due to the nature of large language models, the assistant may do unexpected things at times. It may not react the same way even if the situation is the same. Sometimes it also tells you that it’s unable to help you, even though it should be capable of answering the question you asked it.

We are working on making the assistant more robust against failures, more predictable, and overall better, so make sure to follow future releases.

Try it out

Read about these capabilities and more on our Elastic AI Assistant page or in the release notes.

Existing Elastic Cloud customers can access many of these features directly from the Elastic Cloud console. Not taking advantage of Elastic on cloud? Start a free trial.

The release and timing of any features or functionality described in this post remain at Elastic's sole discretion. Any features or functionality not currently available may not be delivered on time or at all.

In this blog post, we may have used or referred to third party generative AI tools, which are owned and operated by their respective owners. Elastic does not have any control over the third party tools and we have no responsibility or liability for their content, operation or use, nor for any loss or damage that may arise from your use of such tools. Please exercise caution when using AI tools with personal, sensitive or confidential information. Any data you submit may be used for AI training or other purposes. There is no guarantee that information you provide will be kept secure or confidential. You should familiarize yourself with the privacy practices and terms of use of any generative AI tools prior to use.

Elastic, Elasticsearch, ESRE, Elasticsearch Relevance Engine and associated marks are trademarks, logos or registered trademarks of Elasticsearch N.V. in the United States and other countries. All other company and product names are trademarks, logos or registered trademarks of their respective owners.