In the previous post, we discovered what LangChain4j is and how to:

- Have a discussion with LLMs by implementing a

ChatLanguageModeland aChatMemory - Retain chat history in memory to recall the context of a previous discussion with an LLM

This blog post is covering how to:

- Create vector embeddings from text examples

- Store vector embeddings in the Elasticsearch embedding store

- Search for similar vectors

Create embeddings

To create embeddings, we need to define an EmbeddingModel to use. For example, we can use the same mistral model we used in the previous post. It was running with ollama:

EmbeddingModel model = OllamaEmbeddingModel.builder()

.baseUrl(ollama.getEndpoint())

.modelName(MODEL_NAME)

.build();A model is able to generate vectors from text. Here we can check the number of dimensions generated by the model:

Logger.info("Embedding model has {} dimensions.", model.dimension());

// This gives: Embedding model has 4096 dimensions.To generate vectors from a text, we can use:

Response<Embedding> response = model.embed("A text here");Or if we also want to provide Metadata to allow us filtering on things like text, price, release date or whatever, we can use Metadata.from(). For example, we are adding here the game name as a metadata field:

TextSegment game1 = TextSegment.from("""

The game starts off with the main character Guybrush Threepwood stating "I want to be a pirate!"

To do so, he must prove himself to three old pirate captains. During the perilous pirate trials,

he meets the beautiful governor Elaine Marley, with whom he falls in love, unaware that the ghost pirate

LeChuck also has his eyes on her. When Elaine is kidnapped, Guybrush procures crew and ship to track

LeChuck down, defeat him and rescue his love.

""", Metadata.from("gameName", "The Secret of Monkey Island"));

Response<Embedding> response1 = model.embed(game1);

TextSegment game2 = TextSegment.from("""

Out Run is a pseudo-3D driving video game in which the player controls a Ferrari Testarossa

convertible from a third-person rear perspective. The camera is placed near the ground, simulating

a Ferrari driver's position and limiting the player's view into the distance. The road curves,

crests, and dips, which increases the challenge by obscuring upcoming obstacles such as traffic

that the player must avoid. The object of the game is to reach the finish line against a timer.

The game world is divided into multiple stages that each end in a checkpoint, and reaching the end

of a stage provides more time. Near the end of each stage, the track forks to give the player a

choice of routes leading to five final destinations. The destinations represent different

difficulty levels and each conclude with their own ending scene, among them the Ferrari breaking

down or being presented a trophy.

""", Metadata.from("gameName", "Out Run"));

Response<Embedding> response2 = model.embed(game2);If you'd like to run this code, please checkout the Step5EmbedddingsTest.java class.

Add Elasticsearch to store our vectors

LangChain4j provides an in-memory embedding store. This is useful to run simple tests:

EmbeddingStore<TextSegment> embeddingStore = new InMemoryEmbeddingStore<>();

embeddingStore.add(response1.content(), game1);

embeddingStore.add(response2.content(), game2);But obviously, this could not work with much bigger dataset because this datastore stores everything in memory and we don't have infinite memory on our servers. So, we could instead store our embeddings into Elasticsearch which is by definition "elastic" and can scale up and out with your data. For that, let's add Elasticsearch to our project:

<dependency>

<groupId>dev.langchain4j</groupId>

<artifactId>langchain4j-elasticsearch</artifactId>

<version>${langchain4j.version}</version>

</dependency>

<dependency>

<groupId>org.testcontainers</groupId>

<artifactId>elasticsearch</artifactId>

<version>1.20.1</version>

<scope>test</scope>

</dependency>As you noticed, we also added the Elasticsearch TestContainers module to the project, so we can start an Elasticsearch instance from our tests:

// Create the elasticsearch container

ElasticsearchContainer container =

new ElasticsearchContainer("docker.elastic.co/elasticsearch/elasticsearch:8.15.0")

.withPassword("changeme");

// Start the container. This step might take some time...

container.start();

// As we don't want to make our TestContainers code more complex than

// needed, we will use login / password for authentication.

// But note that you can also use API keys which is preferred.

final CredentialsProvider credentialsProvider = new BasicCredentialsProvider();

credentialsProvider.setCredentials(AuthScope.ANY, new UsernamePasswordCredentials("elastic", "changeme"));

// Create a low level Rest client which connects to the elasticsearch container.

client = RestClient.builder(HttpHost.create("https://" + container.getHttpHostAddress()))

.setHttpClientConfigCallback(httpClientBuilder -> {

httpClientBuilder.setDefaultCredentialsProvider(credentialsProvider);

httpClientBuilder.setSSLContext(container.createSslContextFromCa());

return httpClientBuilder;

})

.build();

// Check the cluster is running

client.performRequest(new Request("GET", "/"));To use Elasticsearch as an embedding store, you "just" have to switch from the LangChain4j in-memory datastore to the Elasticsearch datastore:

EmbeddingStore<TextSegment> embeddingStore =

ElasticsearchEmbeddingStore.builder()

.restClient(client)

.build();

embeddingStore.add(response1.content(), game1);

embeddingStore.add(response2.content(), game2);This will store your vectors in Elasticsearch in a default index. You can also change the index name to something more meaningful:

EmbeddingStore<TextSegment> embeddingStore =

ElasticsearchEmbeddingStore.builder()

.indexName("games")

.restClient(client)

.build();

embeddingStore.add(response1.content(), game1);

embeddingStore.add(response2.content(), game2);If you'd like to run this code, please checkout the Step6ElasticsearchEmbedddingsTest.java class.

Search for similar vectors

To search for similar vectors, we first need to transform our question into a vector representation using the same model we used previously. We already did that, so it's not hard to do this again. Note that we don't need the metadata in this case:

String question = "I want to pilot a car";

Embedding questionAsVector = model.embed(question).content();We can build a search request with this representation of our question and ask the embedding store to find the first top vectors:

EmbeddingSearchResult<TextSegment> result = embeddingStore.search(

EmbeddingSearchRequest.builder()

.queryEmbedding(questionAsVector)

.build());We can iterate over the results now and print some information, like the game name which is coming from the metadata and the score:

result.matches().forEach(m -> Logger.info("{} - score [{}]",

m.embedded().metadata().getString("gameName"), m.score()));As we could expect, this gives us "Out Run" as the first hit:

Out Run - score [0.86672974]

The Secret of Monkey Island - score [0.85569763]If you'd like to run this code, please checkout the Step7SearchForVectorsTest.java class.

Behind the scene

The default configuration for the Elasticsearch Embedding store is using the approximate kNN query behind the scene.

POST games/_search

{

"query" : {

"knn": {

"field": "vector",

"query_vector": [-0.019137882, /* ... */, -0.0148779955]

}

}

}But this could be changed by providing another configuration (ElasticsearchConfigurationScript) than the default one (ElasticsearchConfigurationKnn) to the Embedding store:

EmbeddingStore<TextSegment> embeddingStore =

ElasticsearchEmbeddingStore.builder()

.configuration(ElasticsearchConfigurationScript.builder().build())

.indexName("games")

.restClient(client)

.build();The ElasticsearchConfigurationScript implementation runs behind the scene a script_score query using a cosineSimilarity function.

Basically, when calling:

EmbeddingSearchResult<TextSegment> result = embeddingStore.search(

EmbeddingSearchRequest.builder()

.queryEmbedding(questionAsVector)

.build());This now calls:

POST games/_search

{

"query": {

"script_score": {

"script": {

"source": "(cosineSimilarity(params.query_vector, 'vector') + 1.0) / 2",

"params": {

"queryVector": [-0.019137882, /* ... */, -0.0148779955]

}

}

}

}

}In which case the result does not change in term of "order" but just the score is adjusted because the cosineSimilarity call does not use any approximation but compute the cosine for each of the matching vectors:

Out Run - score [0.871952]

The Secret of Monkey Island - score [0.86380446]If you'd like to run this code, please checkout the Step7SearchForVectorsTest.java class.

Conclusion

We have covered how easily you can generate embeddings from your text and how you can store and search for the closest neighbours in Elasticsearch using 2 different approaches:

- Using the approximate and fast

knnquery with the defaultElasticsearchConfigurationKnnoption - Using the exact but slower

script_scorequery with theElasticsearchConfigurationScriptoption

The next step will be about building a full RAG application, based on what we learned here.

Ready to try this out on your own? Start a free trial.

Elasticsearch has integrations for tools from LangChain, Cohere and more. Join our advanced semantic search webinar to build your next GenAI app!

Related content

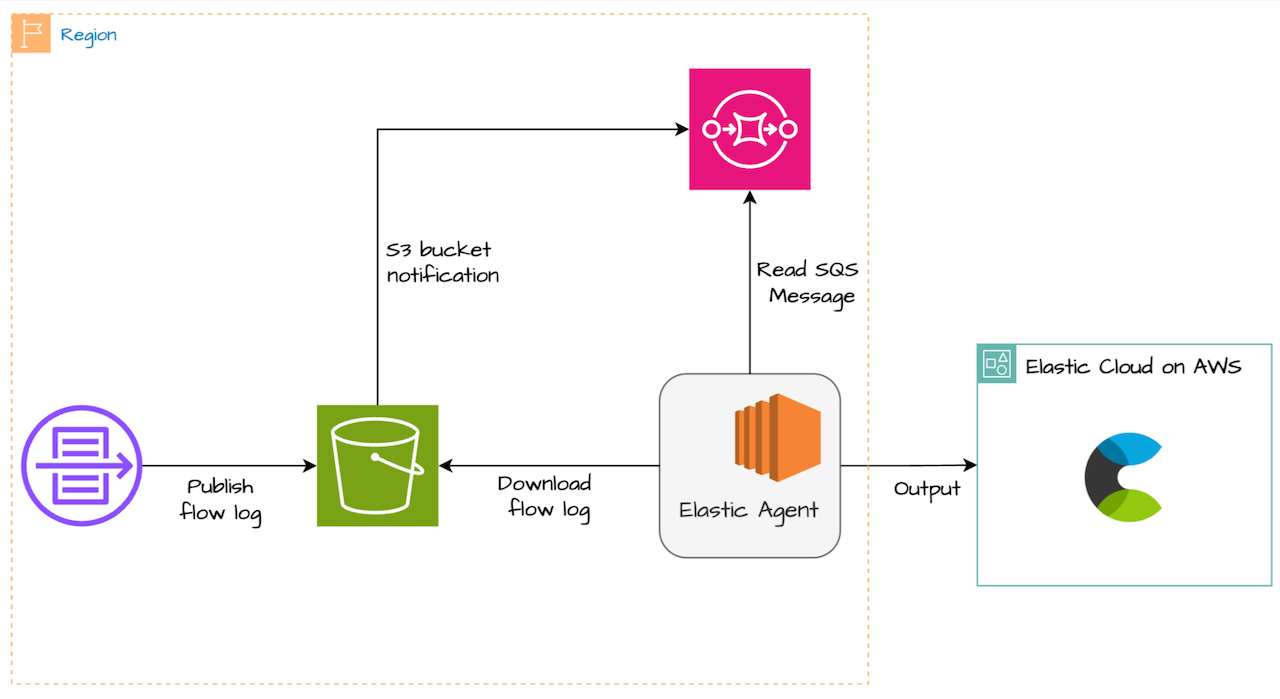

How to ingest data from AWS S3 into Elastic Cloud - Part 2 : Elastic Agent

Learn about different options to ingest data from AWS S3 into Elastic Cloud.

October 9, 2024

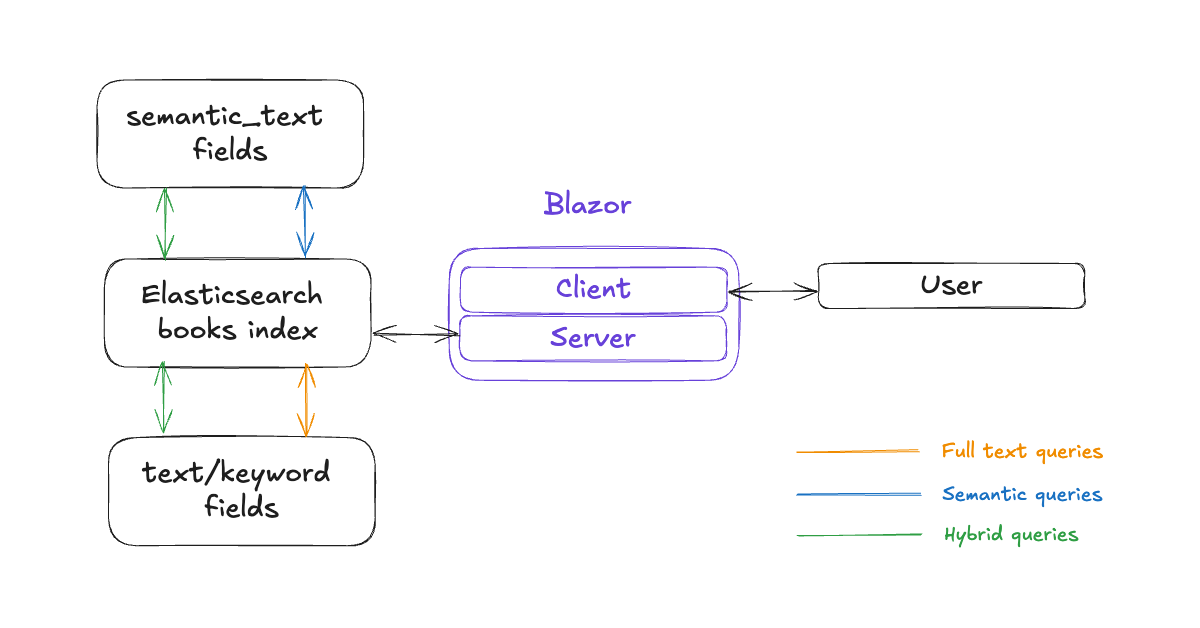

Building a search app with Blazor and Elasticsearch

Learn how to build a search application using Blazor and Elasticsearch, and how to use the Elasticsearch .NET client for hybrid search.

October 4, 2024

Using Eland on Elasticsearch Serverless

Learn how to use Eland on Elasticsearch Serverless

How to ingest data from AWS S3 into Elastic Cloud - Part 1 : Elastic Serverless Forwarder

Learn about different ways you can ingest data from AWS S3 into Elastic Cloud

September 23, 2024

Introducing LangChain4j to simplify LLM integration into Java applications

LangChain4j (LangChain for Java) is a powerful toolset to build your RAG application in plain Java.