
Tutorial

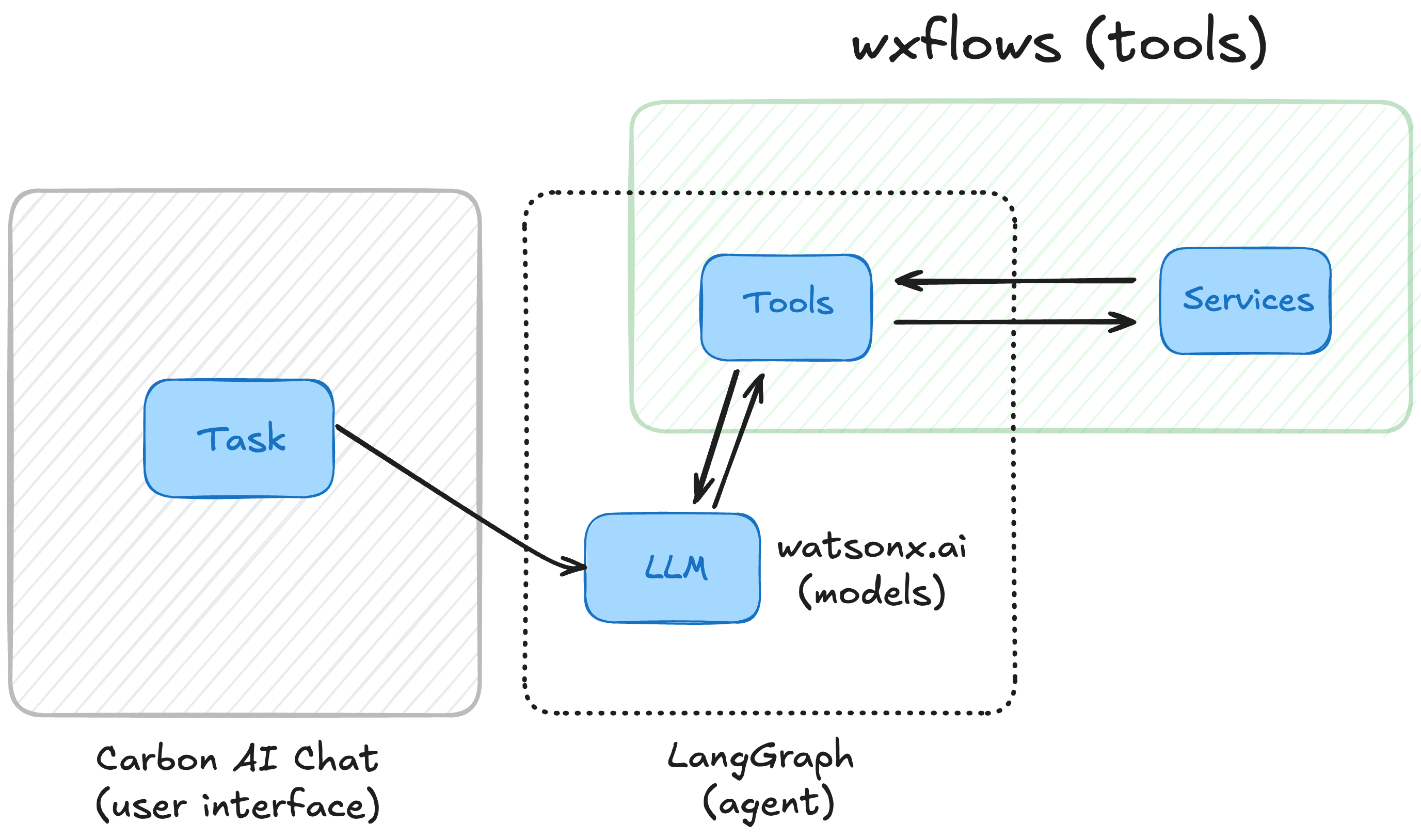

Build a tool calling agent with LangGraph and watsonx.ai flows engine

Learn how to create an AI-powered agent that uses tool-calling to retrieve real-time data with LangGraph and watsonx.ai flows engine

Generative AI is evolving quickly, and in the past couple of years, we’ve seen a few clear patterns that a developer can implement, starting with simple prompting, moving to Retrieval Augmented Generation (RAG), and now tool-calling and agents.

Building applications around agents often involves connecting different tools, models, and APIs. In this tutorial, you’ll learn how to set up and run an AI agent using watsonx.ai flows engine and LangGraph, with help from models powered by IBM’s watsonx.ai platform. This guide will take you step by step through installing the tools you need, deploying your tools to flows engine, and running a chat application on your own machine.

Tool calling and agents

Tool calling allows large language models (LLMs) to work with external tools by following user-defined instructions. The model figures out the required inputs for a tool, and the tool does the actual work. This is great for turning existing data into structured information or linking tools into workflows.
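To make this division of labor concrete, here is a minimal sketch in plain TypeScript with no LLM involved. The `ToolCall` shape, the `tools` registry, and the `word_count` tool are all hypothetical and invented for illustration; the hard-coded `toolCall` object stands in for what a model would produce.

```typescript
// A tool call as a model might emit it: the tool's name plus the inputs it inferred.
// This shape is an assumption for illustration, not a specific library's type.
type ToolCall = { name: string; args: Record<string, string> };

// A tool is just a function that does the actual work.
type Tool = (args: Record<string, string>) => string;

// Hypothetical registry with one toy tool.
const tools: Record<string, Tool> = {
  word_count: (args) => String(args.text.trim().split(/\s+/).length),
};

// Look up the requested tool and run it with the model-supplied arguments.
function runToolCall(call: ToolCall): string {
  const tool = tools[call.name];
  if (!tool) {
    throw new Error("Unknown tool: " + call.name);
  }
  return tool(call.args);
}

// Stand-in for model output: the model picks the tool and fills in the inputs.
const toolCall: ToolCall = { name: "word_count", args: { text: "Flowers for Algernon" } };
console.log(runToolCall(toolCall)); // prints 3
```

The model never executes anything itself. It only names a tool and supplies arguments, which is why the same pattern works for any data source that can be wrapped in a function.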

Flows engine makes this process easier by offering ways to turn your existing data sources such as databases and APIs into tools, and then connect to those tools from different frameworks for building AI applications such as LangChain and LangGraph.

Agents are like AI helpers built to handle specific tasks. To complete these tasks they rely on tool calling. This allows them to access external tools, process information, and expand the existing knowledge of the LLM to fit the user’s needs.

There are several popular frameworks for building agents, either in JavaScript or Python. In this tutorial, we'll be using LangGraph, which builds on LangChain, to create an agent that is able to call tools that can retrieve information from Google Books and Wikipedia. The tools will be defined in flows engine, and connected through the JavaScript SDK.

Build a tool calling agent

In this section, you'll learn how to set up and run an AI agent using watsonx.ai flows engine (wxflows) and LangGraph. The agent will be able to call several tools to answer questions about your favorite books or other popular media using a set of prebuilt community tools. Of course, you can also convert your own data sources (such as databases and APIs) into tools. We'll cover everything you need to know, from installing the tools to deploying and running the app on your machine.

This example includes the following technologies:

- LangGraph SDK (for the agent)

- LangChain SDK watsonx.ai extension (for the models)

- wxflows CLI + SDK (for the tools)

- Carbon AI Chat (for the user interface)

Note: We'll be using the watsonx.ai Chat Model from LangChain, but you can use any of the supported chat models.

Next, we'll install the wxflows CLI, set up a flows engine project, and run the agent using a chat application. We’ll use google_books and wikipedia as examples of tools that the agent can call.

Prerequisites

Before you begin, complete the following prerequisites:

- Sign up for a free watsonx.ai flows engine account.

- Sign up for the free watsonx.ai trial to access models. Alternatively, you can use Ollama to run an LLM locally.

- You need to have Node.js (version 18 or higher) installed.
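If you want to check the Node.js requirement programmatically, the comparison can be sketched as below. The helper name `meetsNodeRequirement` is hypothetical; it takes a version string in the format printed by `node --version`.

```typescript
// Returns true when a Node.js version string such as "v20.11.1" or "18.0.0"
// has a major version of at least `minMajor` (18 for this tutorial).
function meetsNodeRequirement(version: string, minMajor: number = 18): boolean {
  const major = parseInt(version.replace(/^v/, "").split(".")[0], 10);
  // NaN compares as false, so an unparsable string fails the check.
  return major >= minMajor;
}

console.log(meetsNodeRequirement("v20.11.1")); // prints true
console.log(meetsNodeRequirement("v16.20.2")); // prints false
```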

Steps

Let's get started on building your first tool calling agent. Complete the following steps:

Step 1: Install the wxflows CLI

To install the wxflows CLI for Node.js, you need to have Node.js (version 18 or higher) installed on your machine.

- Download the CLI from the installation page.

- Create a new folder on your computer.

mkdir wxflows-project
cd wxflows-project

- Inside this folder, run:

npm i -g ./wxflows-0.2.0-experimental.a3d66e7d.tgz

Use the exact name of the .tgz file you downloaded. In the example above it is wxflows-0.2.0-experimental.a3d66e7d.tgz, but it could be different in your case.

- Log in to the CLI by following the login instructions.

wxflows login

After setting up the CLI for watsonx.ai flows engine, you'll create an endpoint with the Wikipedia and Google Books tools.

Step 2: Set up a watsonx.ai flows engine project

You'll set up a new wxflows project that will include two tools:

- google_books: Used to search and retrieve information from Google Books.

- wikipedia: Used to query and retrieve information from Wikipedia.

To initialize the project you should run the following command:

wxflows init --endpoint api/chat-app-example

This defines an endpoint called api/chat-app-example, and after initializing the project you can proceed by importing the tools:

First, import the Google Books tool:

wxflows import tool https://raw.githubusercontent.com/IBM/wxflows/refs/heads/main/tools/google_books.zip

Then, import the Wikipedia tool:

wxflows import tool https://raw.githubusercontent.com/IBM/wxflows/refs/heads/main/tools/wikipedia.zip

Running the above commands will create several .graphql files in your project directory. The file tools.graphql contains the definitions for the google_books and wikipedia tools, along with their descriptions:

extend type Query {
  wikipediaTool: TC_GraphQL
    @supplies(query: "tc_tools")
    @materializer(
      query: "tc_graphql_tool"
      arguments: [
        { name: "name", const: "wikipedia" }
        { name: "description", const: "Retrieve information from Wikipedia." }
        { name: "fields", const: "search|page" }
      ]
    )
  google_books: TC_GraphQL
    @supplies(query: "tc_tools")
    @materializer(
      query: "tc_graphql_tool"
      arguments: [
        { name: "name", const: "google_books" }
        {
          name: "description"
          const: "Retrieve information from Google books. Find books by search string, for example to search for Daniel Keyes 'Flowers for Algernon' use q: 'intitle:flowers+inauthor:keyes'"
        }
        { name: "fields", const: "books|book" }
      ]
    )
}

You can make changes to the tool name or description, or use the values generated while importing the tools.
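As a reading aid, the three arguments of each tool definition can be mirrored in a small TypeScript type. This is only a sketch: the `ToolDefinition` type and `listFields` helper are hypothetical, and treating `fields` as a pipe-separated list of the GraphQL fields a tool exposes is an interpretation of the snippet, not documented behavior.

```typescript
// Hypothetical TypeScript mirror of the three arguments passed to
// `tc_graphql_tool` in tools.graphql. The type name and helper are assumptions.
interface ToolDefinition {
  name: string;
  description: string;
  fields: string; // for example "books|book"
}

// Assumption: `fields` is a pipe-separated list of the GraphQL query fields
// the tool is allowed to use.
function listFields(tool: ToolDefinition): string[] {
  return tool.fields.split("|");
}

const wikipediaDefinition: ToolDefinition = {
  name: "wikipedia",
  description: "Retrieve information from Wikipedia.",
  fields: "search|page",
};

console.log(listFields(wikipediaDefinition).join(", ")); // prints search, page
```

The description is the part the model reads when deciding which tool to call, so editing it is the main lever you have for steering tool selection.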

To deploy the endpoint with the tool definitions, you should use the following CLI command:

wxflows deploy

Deploying the endpoint should only take a couple of seconds, and the wxflows SDK will use this endpoint to retrieve and call tools, as you'll learn in the next section.

Step 3: Use LangGraph to create an agent

Now that we have a flows engine endpoint, we need to create an agent that can use this endpoint for tool calling. We'll be using LangGraph for this, together with the wxflows SDK and the LangChain extension for watsonx.ai:

- Create a new directory:

mkdir langgraph
cd langgraph

- In this directory, run the following commands to set up a new TypeScript project and to install the needed dependencies:

npm init -y
npm i @langchain/langgraph @langchain/core @langchain/community dotenv typescript @wxflows/sdk@beta

This installs the following packages:

  - @langchain/langgraph: Build agents, structured workflows and handle tool calling.

  - @langchain/core: Provides core functionality for building and managing AI-driven workflows.

  - @langchain/community: Provides access to experimental and community-contributed integrations and tools, such as models on the watsonx.ai platform.

  - dotenv: Loads environment variables from a .env file, making it easier to manage API keys and configuration values.

  - typescript: Sets up TypeScript, a superset of JavaScript, for type checking and better code quality.

  - @wxflows/sdk@beta: Includes the SDK for working with watsonx.ai flows engine, which allows you to deploy and manage tools.

Your project is now ready to start building an agent.

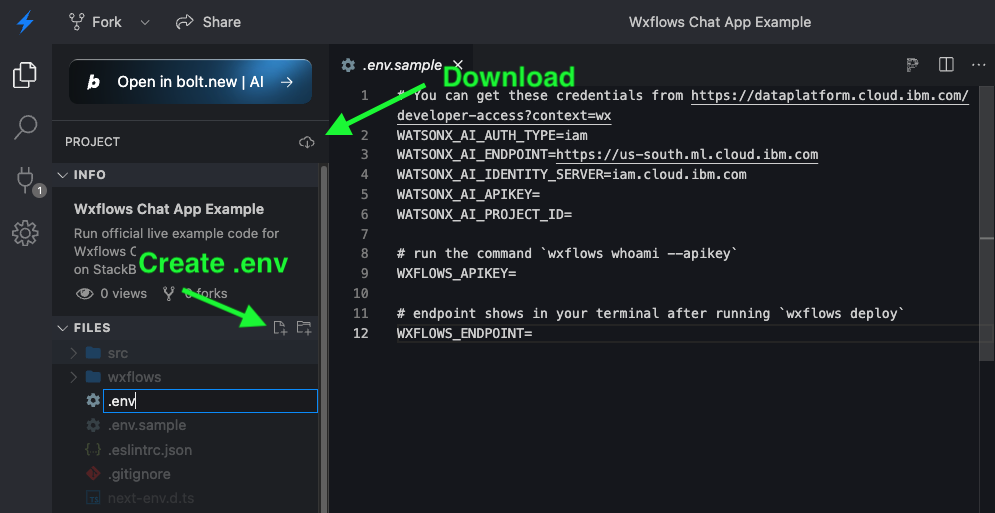

- Create an .env file with the following values:

# You can get these credentials from https://dataplatform.cloud.ibm.com/developer-access?context=wx
WATSONX_AI_AUTH_TYPE=iam
WATSONX_AI_ENDPOINT=https://us-south.ml.cloud.ibm.com
WATSONX_AI_IDENTITY_SERVER=iam.cloud.ibm.com
WATSONX_AI_APIKEY=
WATSONX_AI_PROJECT_ID=

# run the command 'wxflows whoami --apikey'
WXFLOWS_APIKEY=

# endpoint shows in your terminal after running 'wxflows deploy'
WXFLOWS_ENDPOINT=

- After creating the .env file, create a new file called index.ts and add the code for LangGraph:

import { AIMessage, BaseMessage, HumanMessage, SystemMessage } from "@langchain/core/messages";
import { ChatWatsonx } from "@langchain/community/chat_models/ibm";
import { StateGraph } from "@langchain/langgraph";
import { MemorySaver, Annotation } from "@langchain/langgraph";
import { ToolNode } from "@langchain/langgraph/prebuilt";
import wxflows from "@wxflows/sdk/langchain";
import "dotenv/config";

(async () => {
  // Define the graph state
  // See here for more info: https://langchain-ai.github.io/langgraphjs/how-tos/define-state/
  const StateAnnotation = Annotation.Root({
    messages: Annotation<BaseMessage[]>({
      reducer: (x, y) => x.concat(y),
    }),
  });

  const toolClient = new wxflows({
    endpoint: process.env.WXFLOWS_ENDPOINT,
    apikey: process.env.WXFLOWS_APIKEY,
    traceSession: '...'
  });

  const tools = await toolClient.lcTools;
  const toolNode = new ToolNode(tools);

  // Connect to the LLM provider
  const model = new ChatWatsonx({
    model: "mistralai/mistral-large",
    projectId: process.env.WATSONX_AI_PROJECT_ID,
    serviceUrl: process.env.WATSONX_AI_ENDPOINT,
    version: '2024-05-31',
  }).bindTools(tools);

This first part of the code will import the needed dependencies, retrieve the tools from watsonx.ai flows engine, and set up the connection to Mistral Large running on the watsonx.ai platform.

Now, let's add the second part of the code for the index.ts file, which will handle the creation of the agent:

  // Define the function that determines whether to continue or not
  // We can extract the state typing via `StateAnnotation.State`
  function shouldContinue(state: typeof StateAnnotation.State) {
    const messages = state.messages;
    const lastMessage = messages[messages.length - 1] as AIMessage;

    // If the LLM makes a tool call, then we route to the "tools" node
    if (lastMessage.tool_calls?.length) {
      console.log('TOOL CALL', lastMessage.tool_calls)
      return "tools";
    }
    // Otherwise, we stop (reply to the user)
    return "__end__";
  }

  // Define the function that calls the model
  async function callModel(state: typeof StateAnnotation.State) {
    const messages = state.messages;
    const response = await model.invoke(messages);

    // We return a list, because this will get added to the existing list
    return { messages: [response] };
  }

  // Define a new graph
  const workflow = new StateGraph(StateAnnotation)
    .addNode("agent", callModel)
    .addNode("tools", toolNode)
    .addEdge("__start__", "agent")
    .addConditionalEdges("agent", shouldContinue)
    .addEdge("tools", "agent");

  // Initialize memory to persist state between graph runs
  const checkpointer = new MemorySaver();

  // Finally, we compile it!
  // This compiles it into a LangChain Runnable.
  // Note that we're (optionally) passing the memory when compiling the graph
  const app = workflow.compile({ checkpointer });

  // Use the Runnable
  const finalState = await app.invoke(
    {
      messages: [
        new SystemMessage(
          "Only use the tools available, don't answer the question based on pre-trained data"
        ),
        new HumanMessage(
          "Search information about the book escape from james patterson"
        ),
      ],
    },
    { configurable: { thread_id: "42" } }
  );

  console.log(finalState.messages[finalState.messages.length - 1].content);

  // You can use the `thread_id` to ask follow up questions, the conversation context is retained via the saved state (i.e. stored list of messages):
})();

In this second part of the code, the agent is created and we pass a message to the agent: "Search information about the book escape from james patterson". This will kick off a series of interactions between the tools running in flows engine and the LLM in watsonx.ai to provide you with an answer.
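The control flow that the graph encodes (agent, then tools, then agent again until no tool call remains) can be sketched without any dependencies. This is an illustration under stated assumptions, not how LangGraph is implemented: the `Message` shape is simplified, and `callModel` and `callTools` here are fake stand-ins for the watsonx.ai model and the flows engine tools.

```typescript
// Simplified message shape; LangChain's real message classes carry more fields.
type Message = {
  role: "system" | "human" | "ai" | "tool";
  content: string;
  toolCalls?: { name: string; args: Record<string, string> }[];
};

type State = { messages: Message[] };

// Same idea as the reducer in StateAnnotation: new messages are appended.
function reduceMessages(current: Message[], update: Message[]): Message[] {
  return current.concat(update);
}

// Fake model (assumption): asks for a tool once, then answers from the tool result.
function callModel(state: State): Message[] {
  const last = state.messages[state.messages.length - 1];
  if (last.role === "tool") {
    return [{ role: "ai", content: "Answer based on: " + last.content }];
  }
  return [{ role: "ai", content: "", toolCalls: [{ name: "lookup", args: { q: last.content } }] }];
}

// Fake tool node (assumption): returns one tool message per requested call.
function callTools(state: State): Message[] {
  const last = state.messages[state.messages.length - 1];
  return (last.toolCalls || []).map(function (call): Message {
    return { role: "tool", content: "result for " + call.args.q };
  });
}

// Mirrors shouldContinue: route to the tools when the last message requests them.
function shouldContinue(state: State): "tools" | "__end__" {
  const last = state.messages[state.messages.length - 1];
  return last.toolCalls && last.toolCalls.length > 0 ? "tools" : "__end__";
}

// The loop the graph encodes: agent -> (tools -> agent)* -> end.
function runAgent(question: string): State {
  let state: State = { messages: [{ role: "human", content: question }] };
  state = { messages: reduceMessages(state.messages, callModel(state)) };
  while (shouldContinue(state) === "tools") {
    state = { messages: reduceMessages(state.messages, callTools(state)) };
    state = { messages: reduceMessages(state.messages, callModel(state)) };
  }
  return state;
}

const sketchState = runAgent("escape by james patterson");
console.log(sketchState.messages[sketchState.messages.length - 1].content);
// prints "Answer based on: result for escape by james patterson"
```

Because every node only returns new messages and the reducer appends them, the full exchange (question, tool request, tool result, answer) stays available in the state for the next model call.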

To run the above code, you need to add a start script to the package.json file:

"scripts": {
  "start": "npx tsx ./index.ts",
  "test": "echo \"Error: no test specified\" && exit 1"
}

To send the message to the agent you can now run npm start from the terminal, which will return more information about the book "Escape" by "James Patterson". The tool calls will also be printed in your terminal next to the information.

The tool call will look like the following:

TOOL CALL [
  {
    name: 'google_books',
    args: {
      query: '{\n' +
        ' books(q: "intitle:escape+inauthor:patterson") {\n' +
        ' authors\n' +
        ' title\n' +
        ' volumeId\n' +
        ' }\n' +
        '}'
    },
    type: 'tool_call',
    id: 'Zg9jXPOR2'
  }
]

Note: watsonx.ai flows engine is using GraphQL as the underlying technology to define and call tools.
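To see how the `query` argument in the TOOL CALL output is put together, here is a small sketch that assembles a string of the same shape. The `buildBooksQuery` helper is hypothetical and not part of the wxflows SDK; in the real flow the LLM writes this query itself, guided by the tool description. The sketch assumes single-word inputs that contain no quotes.

```typescript
// Hypothetical helper: builds a GraphQL query string shaped like the one the
// model generated in the TOOL CALL output. Not part of the wxflows SDK.
function buildBooksQuery(titleWord: string, authorWord: string): string {
  // Search syntax taken from the google_books tool description:
  // intitle:<word>+inauthor:<word>
  const q = "intitle:" + titleWord.toLowerCase() + "+inauthor:" + authorWord.toLowerCase();
  return [
    "{",
    '  books(q: "' + q + '") {',
    "    authors",
    "    title",
    "    volumeId",
    "  }",
    "}",
  ].join("\n");
}

console.log(buildBooksQuery("Escape", "Patterson"));
```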

And the final response looks something like this:

### Information about the book "Escape" by James Patterson

Here is the information about the book "Escape" by James Patterson:

- **Authors:** James Patterson, David Ellis
- **Title:** Escape
- **Volume ID:** EFtHEAAAQBAJ

Next, you'll implement a chat application that uses the flows engine endpoint and the LangGraph agent.

Step 4: Use the agent in a chat application

The final step is to use the agent and the tools endpoint in a chat application, which you can find in this repository.

You can either run the chat application in the browser using the free service StackBlitz, or click the Download icon to download the source code for the application and run it locally.

This application needs the same credentials as the .env file you created in Step 3, which you can store in a new .env file, either directly in StackBlitz or locally:

# You can get these credentials from https://dataplatform.cloud.ibm.com/developer-access?context=wx
WATSONX_AI_AUTH_TYPE=iam
WATSONX_AI_ENDPOINT=https://us-south.ml.cloud.ibm.com
WATSONX_AI_IDENTITY_SERVER=iam.cloud.ibm.com
WATSONX_AI_APIKEY=
WATSONX_AI_PROJECT_ID=

# run the command 'wxflows whoami --apikey'
WXFLOWS_APIKEY=

# endpoint shows in your terminal after running 'wxflows deploy'
WXFLOWS_ENDPOINT=
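Since several of these values start out empty, it can help to fail early when one is missing. The following is a minimal sketch, assuming you want such a check; the `findMissingVars` helper is hypothetical and is not part of the chat application.

```typescript
// Variable names taken from the .env file above.
const REQUIRED_VARS = [
  "WATSONX_AI_AUTH_TYPE",
  "WATSONX_AI_ENDPOINT",
  "WATSONX_AI_IDENTITY_SERVER",
  "WATSONX_AI_APIKEY",
  "WATSONX_AI_PROJECT_ID",
  "WXFLOWS_APIKEY",
  "WXFLOWS_ENDPOINT",
];

// Hypothetical helper: returns the names of variables that are unset or blank.
function findMissingVars(env: Record<string, string | undefined>): string[] {
  return REQUIRED_VARS.filter(function (name) {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Example with a partially filled environment; in Node.js you would pass process.env.
const missing = findMissingVars({
  WATSONX_AI_AUTH_TYPE: "iam",
  WATSONX_AI_ENDPOINT: "https://us-south.ml.cloud.ibm.com",
  WATSONX_AI_IDENTITY_SERVER: "iam.cloud.ibm.com",
  WATSONX_AI_APIKEY: "",
});
console.log(missing.join(", "));
// prints "WATSONX_AI_APIKEY, WATSONX_AI_PROJECT_ID, WXFLOWS_APIKEY, WXFLOWS_ENDPOINT"
```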

If you want to run the application locally, don't forget to run npm i in the application's directory to install the dependencies. After installing these, you can run npm run dev to start the application.

The chat application uses IBM's Carbon AI Chat component library for its user interface.

You can now ask any sort of question related to books or other popular media. The agent will use the Google Books or Wikipedia tool from watsonx.ai flows engine to answer your questions, and because LangGraph keeps memory state, you can also ask follow-up questions.
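The idea behind follow-up questions is that each conversation has a thread ID and its message history is stored under that ID. The sketch below illustrates only that idea with a hypothetical in-memory store and a canned reply. It is not how LangGraph's MemorySaver is implemented, which checkpoints the full graph state.

```typescript
type ChatMessage = { role: "human" | "ai"; content: string };

// Hypothetical in-memory store keyed by thread ID.
const threads: Record<string, ChatMessage[]> = {};

// Append a user message and a stand-in reply to the given thread, returning
// the full history for that thread.
function chat(threadId: string, text: string): ChatMessage[] {
  const previous = threads[threadId] || [];
  const reply: ChatMessage = {
    role: "ai",
    content: "Reply #" + (previous.length / 2 + 1) + " in thread " + threadId,
  };
  threads[threadId] = previous.concat([{ role: "human", content: text }, reply]);
  return threads[threadId];
}

chat("42", "Tell me about the book Escape");
const thread42 = chat("42", "Who co-wrote it?");
console.log(thread42.length); // prints 4
console.log(chat("43", "New conversation").length); // prints 2
```

Reusing thread ID "42" gives the second question access to the first exchange, while a new thread ID starts from an empty history.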

The next step is to turn your own (enterprise) data into a tool. See the wxflows repository for details.

Summary and next steps

In this tutorial, you've learned how to set up and run an AI agent using IBM watsonx.ai flows engine and LangGraph. You learned how to configure tools for google_books and wikipedia, deploy a flows engine project, and run the application locally or in a browser. These tools will enable your agent to retrieve real-time data and interact with external APIs.

With these new skills, you now have a strong foundation for building AI-powered applications using wxflows and watsonx.ai. Whether you’re creating simple workflows or more complex integrations, the CLI and SDK make it easy to bring your projects to life.

We’re excited to see what you create! Join our Discord community and share your projects with us!