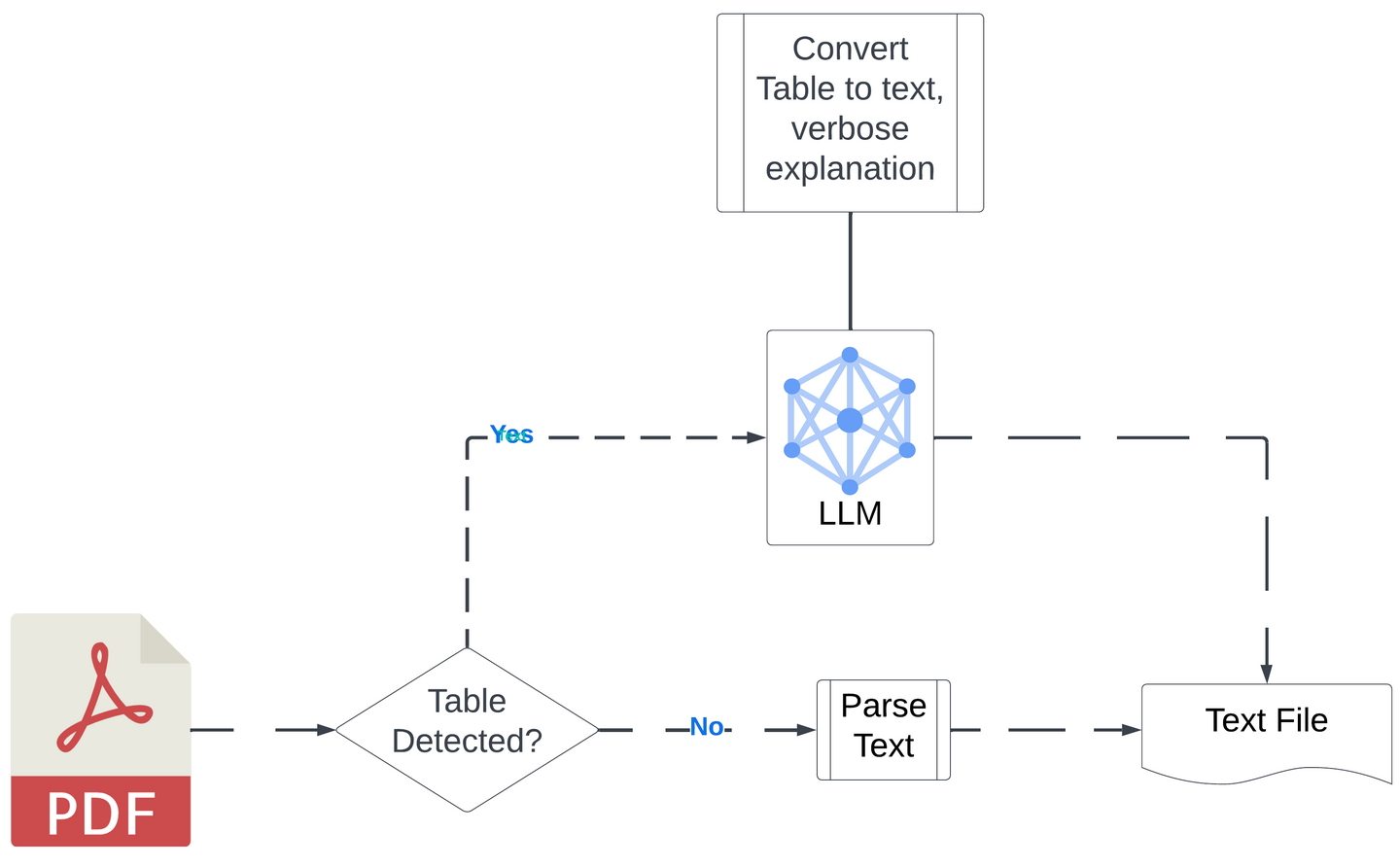

When working with PDfs in a retrieval-augmented generation (RAG) pipeline, a key challenge is efficiently extracting and processing tables. Traditional methods often convert tables into highly normalized formats such as CSV or JSON, which fail to capture the contextual richness needed for effective search and retrieval. These representations break the data down into rows and columns, losing the broader relationships between elements. To address this, I developed a approach that leverages a large language model (LLM) to transform tables into readable text while retaining the context, enhancing the usability of the data in RAG workflows.

This article explains the reasoning behind this approach, the high-level benefits, and the key parts of the notebook.

Why this approach?

Standard table parsing techniques often fail in RAG because they produce highly normalized outputs. While CSV and JSON formats are useful for specific data analytics, they break down in scenarios where more context is needed. Retrieval-augmented generation models thrive on rich, content-heavy data, and having only single rows or minimal data points hinders effective search.

Instead of sticking with the traditional approach of exporting table data in structured formats, I opted to extract the tables, parse them using Azure OpenAI, and reformat the tables into human-readable text. This approach allows for better contextual embedding and enhances searchability without losing the richness of the data.

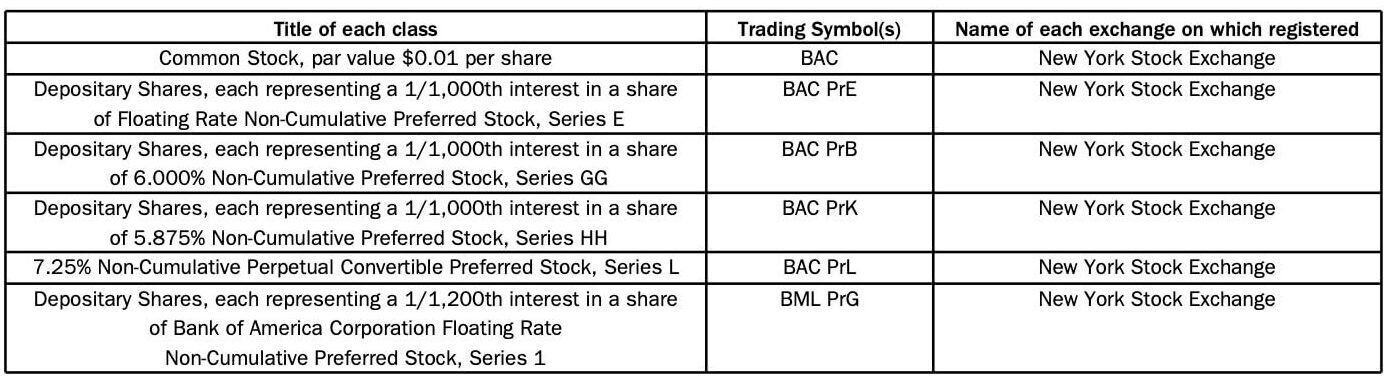

Parsing challenges: A case study with SEC fORM 10-Q

In real-world applications, extracting structured information from PDfs often involves complex tables like the one below from Bank of America's SEC fORM 10-Q.

This table contains critical financial data, such as stock symbols, descriptions, and exchanges. However, parsing such tables presents multiple challenges:

Complexity of table structure

The table includes merged cells, multi-line text, and varying formats for data types like numbers and text. This adds complexity to the parsing process as tools often struggle to identify the correct relationships between the data elements.

Loss of context

When tables are converted to formats such as CSV or JSON, much of the relational context between the table's rows and columns is lost. for example, the relationship between the title of each class and its corresponding trading symbol may not be preserved, impacting the integrity of the extracted data.

Handling special characters and formats

Stock symbols and series names, such as "BAC PrE" and "BAC PrL," may contain abbreviations or special characters that get misinterpreted by traditional parsing tools, leading to inaccurate data extraction.

Addressing the challenge

Using a large language model (LLM) approach, the tables can be transformed into readable text that retains the context of the relationships between rows and columns. This ensures that critical financial information is not lost during parsing and is fully searchable for retrieval-augmented generation (RAG) workflows.

for example, applying this method to the table above outputs the following text:

Table 1 (Page 1) Text Representation:

The following stocks are listed on the New York Stock Exchange:

Common Stock:

Par value: $0.01 per share

Symbol: BAC

Depositary Shares, each representing a 1/1,000th interest in a share of floating Rate Non-Cumulative Preferred Stock, Series E:

Symbol: BAC PrE

Depositary Shares, each representing a 1/1,000th interest in a share of 6.000% Non-Cumulative Preferred Stock, Series GG:

Symbol: BAC PrB

Depositary Shares, each representing a 1/1,000th interest in a share of 5.875% Non-Cumulative Preferred Stock, Series HH:

Symbol: BAC PrK

7.25% Non-Cumulative Perpetual Convertible Preferred Stock, Series L:

Symbol: BAC PrL

Depositary Shares, each representing a 1/1,200th interest in a share of Bank of America Corporation floating Rate Non-Cumulative Preferred Stock, Series 1:

Symbol: BML PrGThe benefits

- Improved searchability: Embedding text instead of highly structured table data ensures that RAG models can capture the relationships and broader context of the content, making it easier to retrieve accurate results.

- Preservation of context: By converting tables into human-readable descriptions, we preserve the intent and structure of the original data, which is essential for RAG workflows where the document's meaning is crucial.

- Handling unstructured data: This method handles the natural unstructured nature of PDfs better than simple table extraction, making it more versatile in real-world applications.

- Readable output: Rather than dealing with abstract, normalized data, the final output is in a format that is easier for both humans and machines to interpret.

Key code explanation

1. Extracting text and tables from PDfs

The first step in the process uses the pdfplumber library to extract both text and tables from each page of the PDf.

import pdfplumber

# Open the PDf and extract pages

with pdfplumber.open('path_to_pdf.pdf') as pdf:

for page in pdf.pages:

text = page.extract_text() # Extract plain text

tables = page.extract_tables() # Extract tablesHere, pdfplumber is utilized to extract both plain text and tables from each page of the PDf. It provides a flexible way to handle PDfs and their internal structure.

2. Cleaning and sending tables to Azure OpenAI

After extracting the tables, the script sends a cleaned version of the table data to Azure OpenAI for transformation into readable text. This allows the LLM to create a natural language summary of the table.

def process_table_with_llm(table):

# Clean the table for missing values and prepare the input

cleaned_table = [row for row in table if row]

# Sending to Azure OpenAI for text generation

prompt = f"Convert the following table into a readable text:

{cleaned_table}"

response = azure_openai.generate_text(prompt)

return response['generated_text']The tables are cleaned to handle missing or None values and are then passed to Azure OpenAI, which generates a textual description of the table's contents. This helps retain the table's context in the final output.

3. Writing the final output

Once the text has been generated from the tables and the non-table text has been extracted, everything is written into a single output file. This ensures that both text and table data are available for downstream tasks like search and retrieval.

with open('output_text_file.txt', 'w') as output_file:

output_file.write(text) # Write non-table text

output_file.write('\n\n--- Table Summary ---\n')

output_file.write(table_summary) # Write table summary from Azure OpenAIBy embedding the table summaries alongside the rest of the text, we provide a comprehensive output that is ready for use in RAG applications, ensuring all information from the PDf is preserved in a human-readable format.

Conclusion

By using an LLM to transform tables into readable text and embedding that text back into the original content, this approach significantly enhances the usability of PDf tables in retrieval-augmented generation workflows. It preserves context, improves searchability, and ensures that no valuable information is lost in the normalization process. This method offers a more comprehensive solution for those working with PDf-based data in RAG applications.

Ready to try this out on your own? Start a free trial.

Elasticsearch has integrations for tools from LangChain, Cohere and more. Join our Beyond RAG Basics webinar to build your next GenAI app!